Tag: Machine Learning

Artificial Intelligence and other advanced technologies are now being used to make decisions about everything from family law to sporting team selection. So, what works and what still needs refinement?

Also, they’re very small, very light and very agile – they clap as they flap their wings. Biologically-inspired drones are now a reality, but how and when will they be used?

To Neguine Rezaii, it’s natural that modern psychiatrists should want to use smartphones and other available technology. Discussions about ethics and privacy are important, she says, but so is an awareness that tech firms already harvest information on our behavior and use it—without our consent—for less noble purposes, such as deciding who will pay more for identical taxi rides or wait longer to be picked up.

“We live in a digital world. Things can always be abused,” she says. “Once an algorithm is out there, then people can take it and use it on others. There’s no way to prevent that. At least in the medical world we ask for consent.”

Maybe the inclusion of a personal device changes the debate, but I am intrigued by the open declaration of data to a third-party entity. Although such solutions bring a certain sense of ease and efficiency, I imagine they also involve handing over a lot of personal information. I wonder what checks and balances have been put in place?

- four optical character recognition libraries to make the text searchable.

- named entity recognition and parts of speech to establish co-references.

- n-grams to identify dates, people, locations and correlations.

This provides an insight into the “messy, dirty truth of data science” and machine learning. You can find more information here.
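As a rough illustration of the n-gram step listed above, here is a minimal Python sketch that counts word n-grams in a piece of text. The function names, the whitespace tokenizer, and the sample sentence are all assumptions made for illustration; this is not the pipeline the original project used, and real work on dates, people, and locations would need proper tokenization and entity recognition.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return every run of n consecutive tokens as a tuple."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def top_ngrams(text, n=2, k=3):
    """Count n-grams over lowercased, whitespace-split text (a naive tokenizer)."""
    tokens = text.lower().split()
    return Counter(ngrams(tokens, n)).most_common(k)


# Hypothetical sample text, invented for this example.
sample = (
    "the meeting on 4 May was in New York "
    "and the meeting on 9 May was in New Jersey"
)

for gram, count in top_ngrams(sample, n=2, k=5):
    print(" ".join(gram), count)
```

Repeated n-grams such as "in new" hint at phrases worth a closer look, which is roughly how frequency counts can surface candidate dates, names, and places before any heavier analysis.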

Make ReCaptcha’s “I’m not a robot” text more accurate – strugee/recaptcha-unpaid-labor

I think there’s a lot to say about machine learning and the push for “personalization” in education. And the historian in me cannot help but add that folks have been trying to “personalize” education using machines for about a century now. The folks building these machines have, for a very long time, believed that collecting the student data generated while using the machines will help them improve their “programmed instruction” – this decades before Mark Zuckerberg was born.

I think we can talk about the labor issues – how this continues to shift expertise and decision making in the classroom, for starters, but also how students’ data and students’ work are being utilized for commercial purposes. I think we can talk about privacy and security issues – how sloppily we know that these companies, and unfortunately our schools as well, handle student and teacher information.

But I’ll pick two reasons that we should be much more critical about education technologies.

Anytime you hear someone say “personalization” or “AI” or “algorithmic,” I urge you to replace that phrase with “prediction.”

Ready or not, technologies such as online surveys, big data, and wearable devices are already being used to measure, monitor, and modify students’ emotions and mindsets.

For years, there’s been a movement to personalize student learning based on each child’s academic strengths, weaknesses, and preferences. Now, some experts believe such efforts shouldn’t be limited to determining how well individual kids spell or subtract. To be effective, the thinking goes, schools also need to know when students are distracted, whether they’re willing to embrace new challenges, and if they can control their impulses and empathize with the emotions of those around them.

This is something that Martin E. P. Seligman has discussed with regard to Facebook. Having recently been part of a demonstration of SEQTA, I understand Ben Williamson’s point that this “could have real consequences.” The concern is whether all of those consequences are good. Will Richardson shares his concern that we have forgotten about learning and the actual lives of the students. Providing his own take on the matter, Bernard Bull has started a seven-part series looking at the impact of AI on education, while Neil Selwyn asks the question, “who does the automated system tell the teacher to help first – the struggling girl who rarely attends school and is predicted to fail, or a high-flying ‘top of the class’ boy?” Selwyn also explains why teachers will never be replaced.

Technology is starting to behave in intelligent and unpredictable ways that even its creators don’t understand. As machines increasingly shape global events, how can we regain control?

Our technologies are extensions of ourselves, codified in machines and infrastructures, in frameworks of knowledge and action. Computers are not here to give us all the answers, but to allow us to put new questions, in new ways, to the universe.

This is one of a few posts from Bridle going around at the moment, including a reflection on technology whistleblowers and YouTube’s response to last year’s exposé. Some of these ideas remind me of the concerns raised in Martin Ford’s Rise of the Robots and Cathy O’Neil’s Weapons of Math Destruction.

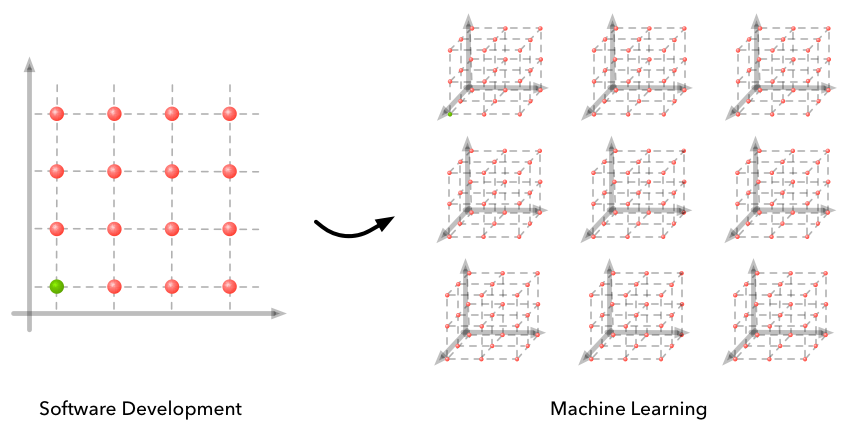

Machine learning often boils down to the art of developing an intuition for where something went wrong (or could work better) when there are many dimensions of things that could go wrong (or work better). This is a key skill that you develop as you continue to build out machine learning projects: you begin to associate certain behavior signals with where the problem likely is in your debugging space.

Are the systems we’ve developed to enhance our lives now impairing our ability to distinguish between reality and falsity?

Guests

Dr Laura D’Olimpio – Senior Lecturer in Philosophy, University of Notre Dame Australia

Andrew Potter – Associate Professor, Institute for the Study of Canada, McGill University; author of The Authenticity Hoax

Hany Farid – Professor of Computer Science, Dartmouth College, USA

Mark Pesce – Honorary Associate, Digital Cultures Programme, University of Sydney

Robert Thompson – Professor of Media and Culture, Syracuse University

This is an interesting episode on augmented reality and fake news. One of the useful points was Hany Farid’s description of machine learning and deep fakes:

When you think about faking an image or faking a video you typically think of something like Adobe Photoshop, you think about somebody takes an image or the frames of a video and manually pastes somebody’s face into an image or removes something from an image or adds something to a video, that’s how we tend to think about digital fakery. And what Deep Fakes is, where that word comes from, by the way, is there has been this revolution in machine learning called deep learning which has to do with the structure of what are called neural networks that are used to learn patterns in data.

And what Deep Fakes are is a very simple idea. You hand this machine learning algorithm two things; a video, let’s say it’s a video of somebody speaking, and then a couple of hundred, maybe a couple of thousand images of a person’s face that you would like to superimpose onto the video. And then the machine learning algorithm takes over. On every frame of the input video it finds automatically the face. It estimates the position of the face; is it looking to the left, to the right, up, down, is the mouth open, is the mouth closed, are the eyes open, are the eyes closed, are they winking, whatever the facial expression is.

It then goes into the sea of images of this new person that you have provided, either finds a face with a similar pose and facial expression or synthesises one automatically, and then replaces the face with that new face. It does that frame after frame after frame for the whole video. And in that way I can take a video of, for example, me talking and superimpose another person’s face over it.
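The matching step Farid describes (estimate the pose in each frame, then look for a face with a similar pose among the supplied images) can be sketched as a nearest-neighbour lookup. The Python below is a toy under heavy assumptions: poses are reduced to three made-up numbers, the `Pose` class, the gallery names, and the distance measure are all hypothetical, and no image processing or synthesis is involved. Real systems rely on trained neural networks and do not work from hand-written pose tuples.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose:
    """Hypothetical pose summary: head angles in degrees, mouth openness in [0, 1]."""
    yaw: float
    pitch: float
    mouth_open: float


def distance(a, b):
    """Squared difference between two poses (an illustrative measure only)."""
    return (
        (a.yaw - b.yaw) ** 2
        + (a.pitch - b.pitch) ** 2
        + (a.mouth_open - b.mouth_open) ** 2
    )


def best_match(frame_pose, gallery):
    """Return the name of the gallery entry whose pose is closest to the frame's."""
    return min(gallery, key=lambda name: distance(frame_pose, gallery[name]))


def match_frames(frame_poses, gallery):
    """Repeat the lookup frame after frame, as in the description above."""
    return [best_match(pose, gallery) for pose in frame_poses]


# Invented data: three reference poses and two video frames.
gallery = {
    "left_closed": Pose(-30.0, 0.0, 0.0),
    "front_open": Pose(0.0, 0.0, 1.0),
    "right_closed": Pose(30.0, 0.0, 0.0),
}
frames = [Pose(-25.0, 2.0, 0.1), Pose(3.0, -1.0, 0.9)]

print(match_frames(frames, gallery))
```

The sketch only covers the "find a face with a similar pose" branch; the alternative Farid mentions, synthesising a new face automatically, is where the deep learning does its work and is well beyond a few lines of code.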

There is a rich design space for interacting with enumerative algorithms, and we believe an equally rich space exists for interacting with neural networks. We have a lot of work left ahead of us to build powerful and trustworthy interfaces for interpretability. But, if we succeed, interpretability promises to be a powerful tool in enabling meaningful human oversight and in building fair, safe, and aligned AI systems.

(Crossposted on the Google Open Source Blog)

In 2015, our early attempts to visualize how neural networks understand images led to psychedelic images. Soon after, we open sourced our code as De…

Google’s caution around images of gorillas illustrates a shortcoming of existing machine-learning technology. With enough data and computing power, software can be trained to categorize images or transcribe speech to a high level of accuracy. But it can’t easily go beyond the experience of that training. And even the very best algorithms lack the ability to use common sense, or abstract concepts, to refine their interpretation of the world as humans do.